Notes from "Lectures on Physics" by Richard Feynman. Mechanics, theory of gravitation, general relativity, optics, electromagnetism, quantum mechanics, thermodynamics. We refer the reader to "The complete lectures on Physics" for the full text.

Contents

- 1 Volume I

- 1.1 Atoms in Motion

- 1.2 The relation of physics to other sciences

- 1.3 Conservation of energy

- 1.4 What is energy

- 1.5 Time and Distance

- 1.6 Probability

- 1.7 Newton's laws of dynamics

- 1.7.1 Momentum and forces

- 1.7.2 Newton's third law

- 1.7.3 Conservation of momentum

- 1.7.4 Relativistic momentum

- 1.7.5 Symmetry in Physics

- 1.7.6 Friction

- 1.7.7 Molecular forces

- 1.7.8 Fundamental forces fields

- 1.7.9 Pseudo forces

- 1.7.10 Nuclear forces

- 1.7.11 Energy of a falling body

- 1.7.12 Conservative forces

- 1.7.13 The principle of relativity

- 1.7.14 The Lorentz transformation

- 1.7.15 The Michelson-Morley experiment

- 1.7.16 The transformation of time

- 1.7.17 Simultaneity

- 1.7.18 Relativistic mechanics

- 1.7.19 The twin paradox

- 1.7.20 Transformation of velocities

- 1.8 Rotations

- 1.9 The harmonic oscillator and resonance

- 1.10 Optics: the principle of least time

- 1.11 Geometrical optics

- 1.12 Electromagnetic radiation

- 1.13 Interference

- 1.14 Diffraction

- 1.15 The origin of the refractive index

- 1.16 Relativistic effects of radiation

- 1.17 Quantum behaviour

- 1.18 The Kinetic Theory of gases

- 1.19 The principles of statistical mechanics

- 1.20 The Laws of Thermodynamics

Volume I

Atoms in Motion

Matter is made of atoms

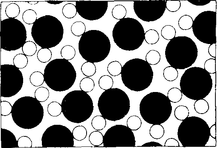

All things are made of atoms—little particles that move around in perpetual motion, attracting each other when they are a little distance apart, but repelling upon being squeezed into one another.

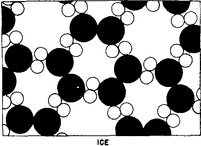

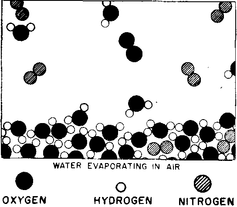

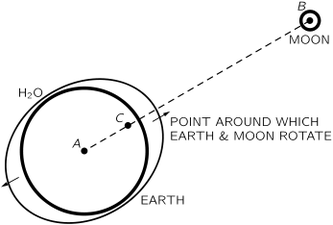

This is a picture of water magnified a billion times, but idealized in several ways. There are two kinds of "blobs" or circles to represent the atoms of oxygen (black) and hydrogen (white), and each oxygen has two hydrogens tied to it. (Each little group of an oxygen with its two hydrogens is called a molecule.)

The particles are "stuck together": they attract each other, this one pulled by that one, etc. The whole group is "glued together," so to speak. On the other hand, the particles do not squeeze through each other. If you try to squeeze two of them too close together, they repel.

The atoms are 1 or 2 × 10^-8 cm in radius. Now 10^-8 cm is called an angstrom (just as another name), so we say they are 1 or 2 angstroms (Å) in radius. Another way to remember their size is this: if an apple is magnified to the size of the earth, then the atoms in the apple are approximately the size of the original apple.

The jiggling motion is what we represent as heat: when we increase the temperature, we increase the motion. If we heat the water, the jiggling increases and the volume between the atoms increases, and if the heating continues there comes a time when the pull between the molecules is not enough to hold them together and they do fly apart and become separated from one another. Of course, this is how we manufacture steam out of water—by increasing the temperature; the particles fly apart because of the increased motion.

In a picture of steam at the same magnification, at ordinary atmospheric pressure there might be only a few molecules in a whole room.

The molecules, being separated from one another, will bounce against the walls.

In order to confine a gas we must apply a pressure. The pressure times the area is the force. Clearly, the force is proportional to the area, for if we increase the area but keep the number of molecules per cubic centimeter the same, we increase the number of collisions with the piston in the same proportion as the area was increased.

Now let us put twice as many molecules in this tank, so as to double the density, and let them have the same speed, i.e., the same temperature. Then, to a close approximation, the number of collisions will be doubled, and since each will be just as "energetic" as before, the pressure is proportional to the density.

If we increase the temperature without changing the density of the gas, i.e., if we increase the speed of the atoms, what is going to happen to the pressure? Well, the atoms hit harder because they are moving faster, and in addition they hit more often, so the pressure increases.
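The proportionalities described above can be checked with a short numerical sketch. It assumes the standard kinetic-theory expression P = n m ⟨v^2⟩ / 3 for an ideal gas, which these notes do not derive; the function names and the sample numbers are illustrative only.

```python
def gas_pressure(number_density, molecule_mass, rms_speed):
    """Pressure in pascals from P = n * m * <v^2> / 3 (assumed ideal-gas result)."""
    return number_density * molecule_mass * rms_speed ** 2 / 3.0


def piston_force(pressure, area):
    """Force on a piston: pressure times area."""
    return pressure * area


# Illustrative values, roughly those of nitrogen near room conditions.
n = 2.5e25    # molecules per cubic meter
m = 4.65e-26  # kilograms per molecule
v = 500.0     # meters per second

p = gas_pressure(n, m, v)
p_double_density = gas_pressure(2 * n, m, v)  # twice the density, same speed
p_double_speed = gas_pressure(n, m, 2 * v)    # same density, twice the speed

print(p_double_density / p)  # ratio of pressures when the density doubles
print(p_double_speed / p)    # ratio of pressures when the speed doubles
```

Doubling the density doubles the pressure, while doubling the speed raises it fourfold: one factor of two because each molecule hits harder, another because it hits more often.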

When we compress a gas slowly, the temperature of the gas increases.

What will happen at very low temperatures is indicated in Fig. 1-4: the molecules lock into a new pattern which is ice. The interesting point is that the material has a definite place for every atom.

The difference between solids and liquids is, then, that in a solid the atoms are arranged in some kind of an array, called a crystalline array, and they do not have a random position at long distances; the position of the atoms on one side of the crystal is determined by that of other atoms millions of atoms away on the other side of the crystal.

There is a part of the symmetry that is hexagonal. You can see that if we turn the picture around an axis by 120°, the picture returns to itself. So there is a symmetry in the ice which accounts for the six-sided appearance of snowflakes. Another thing we can see from Fig. 1-4 is why ice shrinks when it melts. The particular crystal pattern of ice shown here has many "holes" in it, as does the true ice structure. When the organization breaks down, these holes can be occupied by molecules. Most simple substances, with the exception of water and type metal, expand upon melting, because the atoms are closely packed in the solid crystal and upon melting need more room to jiggle around, but an open structure collapses, as in the case of water.

Helium, even at absolute zero, does not freeze, unless the pressure is made so great as to make the atoms squash together. If we increase the pressure, we can make it solidify.

Atomic processes

The molecules in the water are always jiggling around. From time to time, one on the surface happens to be hit a little harder than usual, and gets knocked away.

Thus, molecule by molecule, the water disappears: it evaporates.

An ion is an atom which either has a few extra electrons or has lost a few electrons. In a salt crystal we find chlorine ions (chlorine atoms with an extra electron) and sodium ions (sodium atoms with one electron missing). The ions all stick together by electrical attraction in the solid salt, but when we put them in the water we find, because of the attractions of the negative oxygen and positive hydrogen for the ions, that some of the ions jiggle loose.

By equilibrium we mean that situation in which the rate at which atoms are leaving just matches the rate at which they are coming back. If there is almost no salt in the water, more atoms leave than return, and the salt dissolves. If, on the other hand, there are too many "salt atoms," more return than leave, and the salt is crystallizing.

Chemical reactions

In the case of oxygen, two oxygen atoms stick together very strongly.

Atoms are very special: they like certain particular partners, certain particular directions, and so on.

The carbon atoms are supposed to be in a solid crystal (which could be graphite or diamond). Now, for example, one of the oxygen molecules can come over to the carbon, and each atom can pick up a carbon atom and go flying off in a new combination, "carbon-oxygen," which is a molecule of the gas called carbon monoxide. It is given the chemical name CO.

Carbon attracts oxygen much more than oxygen attracts oxygen or carbon attracts carbon. Therefore in this process the oxygen may arrive with only a little energy, but the oxygen and carbon will snap together with a tremendous vengeance and commotion, and everything near them will pick up the energy. A large amount of motion energy, kinetic energy, is thus generated. This of course is burning.

Every substance is some type of arrangement of atoms.

We know that the carbon dioxide molecule is straight and symmetrical: O—C—O.

The most important hypothesis in all of biology, for example, is that everything that animals do, atoms do. In other words, there is nothing that living things do that cannot be understood from the point of view that they are made of atoms acting according to the laws of physics.

Basic Physics

Physics before 1920

All things, even ourselves, are made of fine-grained, enormously strongly interacting plus and minus parts, all neatly balanced out.

With this picture the atoms were easier to understand. They were thought to have a "nucleus" at the center, which is positively electrically charged and very massive, and the nucleus is surrounded by a certain number of "electrons" which are very light and negatively charged. Now we go a little ahead in our story to remark that in the nucleus itself there were found two kinds of particles, protons and neutrons, almost of the same weight and very heavy. The protons are electrically charged and the neutrons are neutral. If we have an atom with six protons inside its nucleus, and this is surrounded by six electrons (the negative particles in the ordinary world of matter are all electrons, and these are very light compared with the protons and neutrons which make nuclei), this would be atom number six in the chemical table, and it is called carbon. Atom number eight is called oxygen, etc., because the chemical properties depend upon the electrons on the outside, and in fact only upon how many electrons there are.

The existence of the positive charge, in some sense, distorts, or creates a "condition" in space, so that when we put the negative charge in, it feels a force. This potentiality for producing a force is called an electric field. When we put an electron in an electric field, we say it is "pulled." We then have two rules: (a) charges make a field, and (b) charges in fields have forces on them and move.

Magnetic influences have to do with charges in relative motion, so magnetic forces and electric forces can really be attributed to one field, as two different aspects of exactly the same thing.

Although the forces between two charged objects should go inversely as the square of the distance, it is found, when we shake a charge, that the influence extends very much farther out than we would guess at first sight. That is, the effect falls off more slowly than the inverse square.

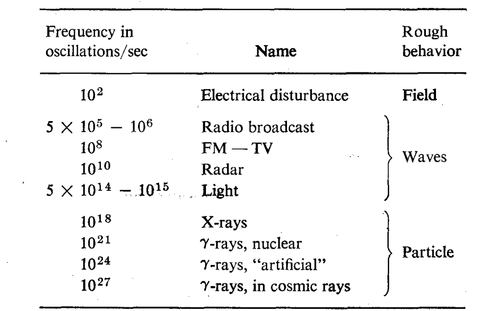

The only thing that is really different from one wave to another is the frequency of oscillation.

The usual "pickup" that we get from electric currents in the circuits in the walls of a building has a frequency of about one hundred cycles per second. If we increase the frequency to 500 or 1000 kilocycles (1 kilocycle = 1000 cycles) per second, we are "on the air," for this is the frequency range which is used for radio broadcasts. (Of course it has nothing to do with the air! We can have radio broadcasts without any air.) If we again increase the frequency, we come into the range that is used for FM and TV. Going still further, we use certain short waves, for example for radar. Still higher, and we do not need an instrument to "see" the stuff, we can see it with the human eye. In the range of frequency from about 5 × 10^14 to 10^15 cycles per second, the waves are visible light.

Quantum Physics

The mechanical rules of "inertia" and "forces" are wrong (Newton's laws are wrong) in the world of atoms. Instead, it was discovered that things on a small scale behave nothing like things on a large scale. That is what makes physics difficult, and very interesting. It is hard because the way things behave on a small scale is so "unnatural"; we have no direct experience with it.

Quantum mechanics has many aspects. In the first place, the idea that a particle has a definite location and a definite speed is no longer allowed; that is wrong. To give an example of how wrong classical physics is, there is a rule in quantum mechanics that says that one cannot know both where something is and how fast it is moving.

An atom has a diameter of about 10^-8 cm.

There is no distinction between a wave and a particle. So quantum mechanics unifies the idea of the field and its waves, and the particles, all into one. Now it is true that when the frequency is low, the field aspect of the phenomenon is more evident, or more useful as an approximate description in terms of everyday experiences. But as the frequency increases, the particle aspects of the phenomenon become more evident with the equipment with which we usually make the measurements.

We have a new kind of particle to add to the electron, the proton, and the neutron. That new particle is called a photon. The new view of the interaction of electrons and protons that is electromagnetic theory, but with everything quantum-mechanically correct, is called quantum electrodynamics. This fundamental theory of the interaction of light and matter, or electric field and charges, is our greatest success so far in physics. In this one theory we have the basic rules for all ordinary phenomena except for gravitation and nuclear processes. For example, out of quantum electrodynamics come all known electrical, mechanical, and chemical laws: the laws for the collision of billiard balls, the motions of wires in magnetic fields, the specific heat of carbon monoxide, the color of neon signs, the density of salt, and the reactions of hydrogen and oxygen to make water are all consequences of this one law.

Quantum electrodynamics tells the properties of very high-energy photons, gamma rays, etc. It predicted another very remarkable thing: besides the electron, there should be another particle of the same mass, but of opposite charge, called a positron, and these two, coming together, could annihilate each other with the emission of light or gamma rays. (After all, light and gamma rays are all the same, they are just different points on a frequency scale.) The generalization of this, that for each particle there is an antiparticle, turns out to be true. In the case of electrons, the antiparticle has another name (it is called a positron), but for most other particles, it is called anti-so-and-so, like antiproton or antineutron.

Nuclei and particles

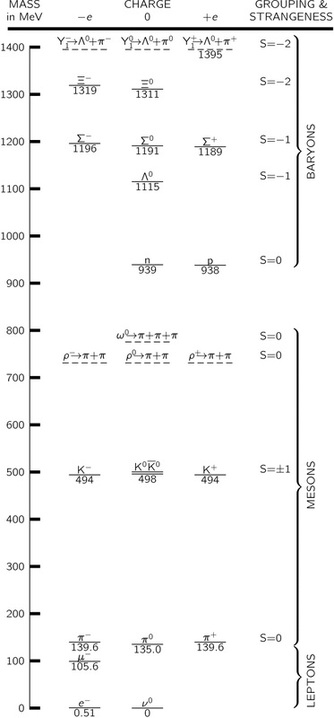

One such chart of the new particles was made independently by Gell-Mann in the U.S.A. and Nishijima in Japan. The basis of their classification is a new number, like the electric charge, which can be assigned to each particle, called its "strangeness," S. This number is conserved, like the electric charge, in reactions which take place by nuclear forces.

In Table 2-2 are listed all the particles. We cannot discuss them much at this stage, but the table will at least show you how much we do not know. Underneath each particle its mass is given in a certain unit, called the Mev. One Mev is equal to 1.78 × 10^-27 gram. The reason this unit was chosen is historical, and we shall not go into it now. More massive particles are put higher up on the chart; we see that a neutron and a proton have almost the same mass. In vertical columns we have put the particles with the same electrical charge, all neutral objects in one column, all positively charged ones to the right of this one, and all negatively charged objects to the left.

Particles are shown with a solid line and "resonances" with a dashed one.

Although all of the particles except the electron, neutrino, photon, graviton, and proton are unstable, decay products have been shown only for the resonances. Strangeness assignments are not applicable for leptons, since they do not interact strongly with nuclei.

All the particles grouped together with the neutrons and protons are called baryons.

In addition to the baryons the other particles which are involved in the nuclear interaction are called mesons. There are first the pions, which come in three varieties, positive, negative, and neutral; they form another multiplet.

We are confronted with a large number of particles, which together seem to be the fundamental constituents of matter. Fortunately, these particles are not all different in their interactions with one another. In fact, there seem to be just four kinds of interaction between particles which, in the order of decreasing strength, are the nuclear force, electrical interactions, the beta-decay interaction, and gravity. The photon is coupled to all charged particles and the strength of the interaction is measured by a number, 1/137. The detailed law of this coupling is known: it is quantum electrodynamics.

* The "strength" is a dimensionless measure of the coupling constant involved in each interaction.

Gravity is coupled to all energy, but its coupling is extremely weak, much weaker than that of electricity. This law is also known. Then there are the so-called weak decays: beta decay, which causes the neutron to disintegrate into proton, electron, and neutrino, relatively slowly. This law is only partly known. The so-called strong interaction, the meson-baryon interaction, has a strength of 1 in this scale, and the law is completely unknown, although there are a number of known rules, such as that the number of baryons does not change in any reaction.

Elementary Interactions

| Coupling | Strength* |
|---|---|
| Gravity | |
| Weak decays | |
| Electromagnetic | 1/137 |
| Mesons-baryons | 1 |

The relation of physics to other sciences

Biology

If we have one substance and another very similar substance, the one does not just turn into the other, because the two forms are usually separated by an energy barrier or "hill." Consider this analogy: If we wanted to take an object from one place to another, at the same level but on the other side of a hill, we could push it over the top, but to do so requires the addition of some energy. Thus most chemical reactions do not occur, because there is what is called an activation energy in the way. Adding an extra atom to our chemical requires that we get it close enough that some rearrangement can occur; then it will stick. But if we cannot give it enough energy to get it close enough, the reaction will not go to completion; it will just go part way up the "hill" and back down again. However, if we could literally take the molecules in our hands and push and pull the atoms around in such a way as to open a hole to let the new atom in, and then let it snap back, we would have found another way, around the hill, which would not require extra energy, and the reaction would go easily. Now there actually are, in the cells, very large molecules, much larger than the ones whose changes we have been describing, which in some complicated way hold the smaller molecules just right, so that the reaction can occur easily. These very large and complicated things are called enzymes. (They were first called ferments, because they were originally discovered in the fermentation of sugar. In fact, some of the first reactions in the cycle were discovered there.) In the presence of an enzyme the reaction will go.

An enzyme is made of another substance called protein. Enzymes are very big and complicated, and each one is different, each being built to control a certain special reaction.

We emphasize that the enzymes themselves are not involved in the reaction directly. They do not change; they merely let an atom go from one place to another. Having done so, the enzyme is ready to do it to the next molecule, like a machine in a factory.

Not all proteins are enzymes, but all enzymes are proteins. Proteins are a very characteristic substance of life: first of all they make up all the enzymes, and second, they make up much of the rest of living material. Proteins have a very interesting and simple structure. They are a series, or chain, of different amino acids. There are twenty different amino acids, and they all can combine with each other to form chains in which the backbone is CO-NH, etc. Proteins are nothing but chains of various ones of these twenty amino acids. Each of the amino acids probably serves some special purpose.

A red-eyed fly makes a red-eyed fly baby, and so the information for the whole pattern of enzymes to make red pigment must be passed from one fly to the next. This is done by a substance in the nucleus of the cell, not a protein, called DNA (short for desoxyribose nucleic acid). This is the key substance which is passed from one cell to another (for instance, sperm cells consist mostly of DNA) and carries the information as to how to make the enzymes. DNA is the "blueprint."

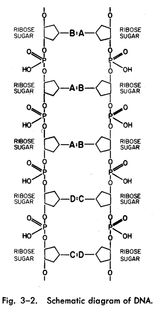

The DNA molecule is a pair of chains, twisted upon each other. The backbone of each of these chains, which are analogous to the chains of proteins but chemically quite different, is a series of sugar and phosphate groups, as shown in Fig. 3-2. Now we see how the chain can contain instructions, for if we could split this chain down the middle, we would have a series BAADC . . . and every living thing could have a different series. Thus perhaps, in some way, the specific instructions for the manufacture of proteins are contained in the specific series of the DNA.

The cross-links that join the two chains are of four kinds, called adenine, thymine, cytosine, and guanine, but let us call them A, B, C, and D. The interesting thing is that only certain pairs can sit opposite each other, for example A with B and C with D.
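The pairing rule means that one chain fixes the other. A minimal sketch, using the notes' own labels A, B, C, D and a hypothetical helper name, shows how the opposite series follows from a given one:

```python
# Pairing rule from the notes: A sits opposite B, and C sits opposite D.
PARTNER = {"A": "B", "B": "A", "C": "D", "D": "C"}


def complementary_chain(chain):
    """Return the series that must sit opposite the given one."""
    return "".join(PARTNER[unit] for unit in chain)


print(complementary_chain("BAADC"))  # prints ABBCD
```

Applying the rule twice returns the original series, which is why either half of a split molecule carries the full information.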

Conservation of energy

What is energy

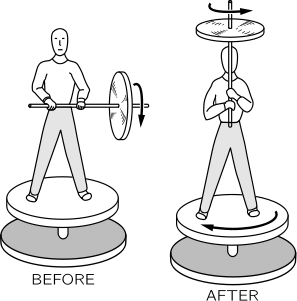

In quantum mechanics it turns out that the conservation of energy is very closely related to another important property of the world: things do not depend on the absolute time. We can set up an experiment at a given moment and try it out, and then do the same experiment at a later moment, and it will behave in exactly the same way. Whether this is strictly true or not, we do not know. If we assume that it is true, and add the principles of quantum mechanics, then we can deduce the principle of the conservation of energy. The other conservation laws are also linked together. The conservation of momentum is associated in quantum mechanics with the proposition that it makes no difference where you do the experiment, the results will always be the same. As independence in space has to do with the conservation of momentum, independence of time has to do with the conservation of energy, and finally, if we turn our apparatus, this too makes no difference, and so the invariance of the world to angular orientation is related to the conservation of angular momentum. Besides these, there are three other conservation laws, exact so far as we can tell today, which are much simpler to understand because they are in the nature of counting blocks.

The first of the three is the conservation of charge, and that merely means that you count how many positive, minus how many negative electrical charges you have, and the number is never changed. You may get rid of a positive with a negative, but you do not create any net excess of positives over negatives. Two other laws are analogous to this one—one is called the conservation of baryons. There are a number of strange particles, a neutron and a proton are examples, which are called baryons. In any reaction whatever in nature, if we count how many baryons are coming into a process, the number of baryons which come out will be exactly the same. There is another law, the conservation of leptons. We can say that the group of particles called leptons are: electron, mu meson, and neutrino. There is an antielectron which is a positron, that is, a −1 lepton. Counting the total number of leptons in a reaction reveals that the number in and out never changes, at least so far as we know at present.

Time and Distance

Motion

Galileo's first experiments on motion were done by using his pulse to count off equal intervals of time. Let us do the same.

We may count off beats of a pulse as the ball rolls down the track: "one ... two ... three ... four ... five ... six ... seven ... eight ..." We ask a friend to make a small mark at the location of the ball at each count; we can then measure the distance the ball travelled from the point of release in one, or two, or three, etc., equal intervals of time. Galileo expressed the result of his observations in this way: if the location of the ball is marked at 1, 2, 3, 4, ... units of time from the instant of its release, those marks are distant from the starting point in proportion to the numbers 1, 4, 9, 16, ... Today we would say the distance is proportional to the square of the time:

D ∝ t^2
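Galileo's rule is easy to tabulate. The sketch below assumes the distance after one time unit is taken as the unit of length; the function name is illustrative.

```python
def distance_from_release(time_units, distance_after_one_unit=1.0):
    """Distance rolled after a number of equal time units, taking distance proportional to time squared."""
    return distance_after_one_unit * time_units ** 2


marks = [distance_from_release(t) for t in range(1, 5)]
print(marks)  # prints [1.0, 4.0, 9.0, 16.0]

# Distance covered during each successive interval.
gaps = [later - earlier for earlier, later in zip([0.0] + marks, marks)]
print(gaps)  # prints [1.0, 3.0, 5.0, 7.0]
```

The distances covered in successive intervals grow as the odd numbers, an equivalent way of stating the same law.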

Time

Webster defines "a time" as "a period," and the latter as "a time".

Maybe it is just as well if we face the fact that time is one of the things we probably cannot define (in the dictionary sense), and just say that it is what we already know it to be: it is how long we wait!

What really matters anyway is not how we define time, but how we measure it.

We can just say that we base our definition of time on the repetition of some apparently periodic event.

Short times

Galileo decided that a given pendulum always swings back and forth in equal intervals of time so long as the size of the swing is kept small.

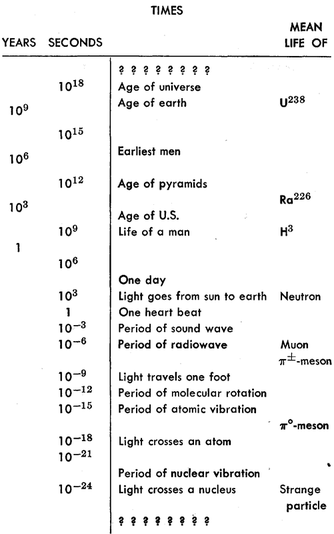

With modern electronic techniques, oscillators have been built with periods as short as about 10^-12 second, and they have been calibrated (by comparison methods such as we have described) in terms of our standard unit of time, the second.

Within the past few years, just such a technique was used to measure the lifetime of the π⁰-meson. By observing in a microscope the minute tracks left in a photographic emulsion in which π⁰-mesons had been created, one saw that a π⁰-meson (known to be travelling at a certain speed nearly that of light) went a distance of about 10^-7 meter, on the average, before disintegrating. It lived for only about 10^-16 sec.
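The lifetime estimate is simply the track length divided by the speed. The sketch below assumes a track of 10^-7 meter and a speed equal to that of light, and it ignores relativistic time dilation, so it gives only the order of magnitude as seen in the laboratory; the function name is illustrative.

```python
SPEED_OF_LIGHT = 2.998e8  # meters per second


def flight_time(track_length_m, speed_m_per_s=SPEED_OF_LIGHT):
    """Time taken to cover a track of the given length at constant speed."""
    return track_length_m / speed_m_per_s


# Assumed average track length of 1e-7 meter.
lifetime = flight_time(1e-7)
print(lifetime)  # a few times 1e-16 second
```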

Long times

We find that the radioactivity of a particular sample of material decreases by the same fraction for successive equal increases in its age.

We observe that if the radioactivity decreases to one-half in T days (called the "half-life"), then it decreases to one-quarter in another T days, and so on.

If we knew that a piece of material, say a piece of wood, had contained an amount A of radioactive material when it was formed, and we found out by a direct measurement that it now contains the amount B, we could compute the age of the object, t, by solving the equation

B = A (1/2)^(t/T)

where T is the half-life.

There are, fortunately, cases in which we can know the amount of radioactivity that was in an object when it was formed. We know, for example, that the carbon dioxide in the air contains a certain small fraction of the radioactive carbon isotope C14 (replenished continuously by the action of cosmic rays). If we measure the total carbon content of an object, we know that a certain fraction of that amount was originally the radioactive C14; we know, therefore, the starting amount A to use in the formula above. Carbon-14 has a half-life of 5000 years. By careful measurements we can measure the amount left after 20 half-lives or so and can therefore "date" organic objects which grew as long as 100,000 years ago.
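Solving the decay law B = A (1/2)^(t/T) for the age gives t = T log2(A/B). A minimal sketch of that calculation follows, using the round 5000-year half-life quoted in the notes; the function name is illustrative.

```python
import math


def age_from_activity(initial_amount, present_amount, half_life):
    """Solve B = A * (1/2) ** (t / T) for the age t."""
    return half_life * math.log2(initial_amount / present_amount)


HALF_LIFE_C14 = 5000.0  # years, the round figure used in the notes

# A sample retaining one quarter of its original carbon-14 is two half-lives old.
print(age_from_activity(1.0, 0.25, HALF_LIFE_C14))  # prints 10000.0

# After 20 half-lives only one part in 2**20 remains.
oldest = age_from_activity(2.0 ** 20, 1.0, HALF_LIFE_C14)
```

Twenty half-lives correspond to the 100,000-year limit mentioned above, at which point about one millionth of the original carbon-14 is left.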

Uranium, for example, has an isotope whose half-life is about 10^9 years, so that if some material was formed with uranium in it 10^9 years ago, only half the uranium would remain today. When the uranium disintegrates, it changes into lead. Consider a piece of rock which was formed a long time ago in some chemical process. Lead, being of a chemical nature different from uranium, would appear in one part of the rock and uranium would appear in another part of the rock. The uranium and lead would be separate. If we look at that piece of rock today, where there should only be uranium we will now find a certain fraction of uranium and a certain fraction of lead. By comparing these fractions, we can tell what percent of the uranium disappeared and changed into lead. By this method, the age of certain rocks has been determined to be several billion years.

It is now believed that at least our part of the universe had its beginning about ten or twelve billion years ago.

Units and standards of time

Recently we have been gaining experience with some natural oscillators which we now believe would provide a more constant time reference than the earth, and which are also based on a natural phenomenon available to everyone. These are the so-called "atomic clocks." Their basic internal period is that of an atomic vibration which is very insensitive to the temperature or any other external effects. These clocks keep time to an accuracy of one part in 10^9 or better.

Large distances

We have found by experience that distance can be measured in another fashion: by triangulation.

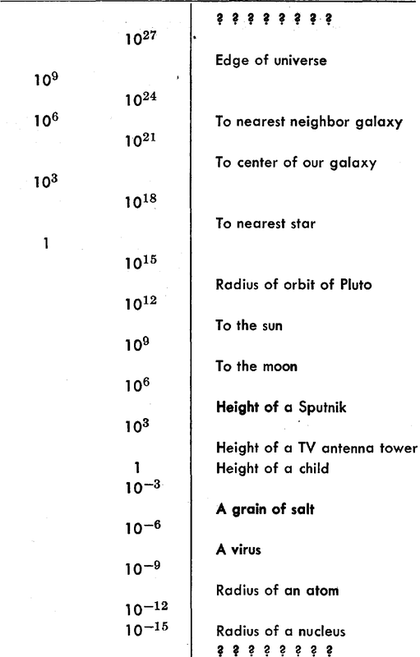

For example, we were able to use triangulation to measure the height of the first Sputnik. We found that it was roughly 5 × 10^5 meters high. By more careful measurements the distance to the moon can be measured in the same way. Two telescopes at different places on the earth can give us the two angles we need. It has been found in this way that the moon is 4 × 10^8 meters away.

The earth moving around the sun gives us a large baseline for measurements of objects outside the solar system. If we focus a telescope on a star in summer and in winter, we might hope to determine these two angles accurately enough to be able to measure the distance to a star.
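Triangulation reduces to plane trigonometry: a known baseline and the two angles measured at its ends fix the triangle. The sketch below assumes each angle is measured between the baseline and the line of sight to the object, and returns the perpendicular distance from the baseline; the function name and the sample numbers are illustrative.

```python
import math


def distance_by_triangulation(baseline, angle_a, angle_b):
    """Perpendicular distance from the baseline to the object (angles in radians).

    By the law of sines the sight line from the first end has length
    baseline * sin(angle_b) / sin(angle_a + angle_b); multiplying by
    sin(angle_a) gives the perpendicular distance.
    """
    return (baseline * math.sin(angle_a) * math.sin(angle_b)
            / math.sin(angle_a + angle_b))


# Two observers 2 units apart, each sighting the object at 45 degrees.
d = distance_by_triangulation(2.0, math.radians(45.0), math.radians(45.0))
print(d)  # very nearly 1
```

For a very distant object the two angles add up to almost 180 degrees, so the denominator is tiny and small errors in the angles produce large errors in the distance. That is why a long baseline, such as the diameter of the earth's orbit, is needed for the stars.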

Astronomers are always inventing new ways of measuring distance. They find, for example, that they can estimate the size and brightness of a star by its color. The color and brightness of many nearby stars, whose distances are known by triangulation, have been measured, and it is found that there is a smooth relationship between the color and the intrinsic brightness of stars (in most cases). If one now measures the color of a distant star, one may use the color-brightness relationship to determine the intrinsic brightness of the star. By measuring how bright the star appears to us at the earth (or perhaps we should say how dim it appears), we can compute how far away it is. (For a given intrinsic brightness, the apparent brightness decreases with the square of the distance.)

Evidence supports the idea that galaxies are all about the same size.
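The inverse-square rule can be turned around to give the distance. The sketch below assumes the star radiates equally in all directions and that nothing absorbs light on the way, so that apparent brightness = luminosity / (4π d^2); the function name is illustrative, and the sun is used as a check with commonly quoted values for its luminosity and its brightness at the earth.

```python
import math


def distance_from_brightness(intrinsic_luminosity, apparent_brightness):
    """Distance in meters from luminosity (watts) and apparent brightness (watts per square meter)."""
    return math.sqrt(intrinsic_luminosity / (4.0 * math.pi * apparent_brightness))


# Check on the sun: about 3.828e26 W emitted, about 1361 W/m^2 received at the earth.
d_sun = distance_from_brightness(3.828e26, 1361.0)
print(d_sun)  # roughly 1.5e11 meters

# A star of the same luminosity that appears four times dimmer is twice as far.
ratio = distance_from_brightness(3.828e26, 1361.0 / 4.0) / d_sun
```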

Photographs of exceedingly distant galaxies have recently been obtained with the giant Palomar telescope. One is shown in Fig. 5-8. It is now believed that some of these galaxies are about halfway to the limit of the universe, about 10^26 meters away, the largest distance we can contemplate!

Short distances

Without much difficulty we can mark off one thousand equal spaces which add up to one meter. With somewhat more difficulty, but in a similar way (using a good microscope), we can mark off a thousand equal subdivisions of the millimeter to make a scale of microns (millionths of a meter). It is difficult to continue to smaller scales, because we cannot "see" objects smaller than the wavelength of visible light (about 5 × 10^-7 meter).

From an observation of the way light of short wavelength (x-radiation) is reflected from a pattern of marks of known separation, we determine the wavelength of the light vibrations. Then, from the pattern of the scattering of the same light from a crystal, we can determine the relative location of the atoms in the crystal, obtaining results which agree with the atomic spacings also determined by chemical means. We find in this way that atoms have a diameter of about 10^-10 meter.

Measurement of a nuclear cross section can be made by passing a beam of high-energy particles through a thin slab of material and observing the number of particles which do not get through.

The chance that a very small particle will hit a nucleus on the trip through is just the total area covered by the profiles of the nuclei divided by the total area in the picture. Suppose that we know that in an area A of our slab of material there are N atoms (each with one nucleus, of course). Then the total area "covered" by the nuclei is Nσ, where σ is the effective area (cross section) of one nucleus. Now let the number of particles of our beam which arrive at the slab be n_1 and the number which come out the other side be n_2. The fraction which do not get through is (n_1 − n_2)/n_1, which should just equal the fraction of the area covered, Nσ/A. We can obtain the radius r of the nucleus from the equation

πr^2 = σ = (A/N) (n_1 − n_2)/n_1

From such an experiment we find that the radii of the nuclei are from about 1 to 6 times $10^{-15}$ meter. The length unit $10^{-15}$ meter is called the fermi, in honor of Enrico Fermi (1901-1954).
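A minimal sketch of this beam-attenuation estimate follows. It assumes a slab thin enough that the nuclei do not shadow one another, so that the stopped fraction equals the covered fraction of area. The function name and the beam counts are hypothetical, chosen only to give a radius of a few fermis.

```python
import math

def nuclear_radius(n_in, n_out, n_atoms, area):
    """Estimate a nuclear radius (m) from a thin-slab attenuation count.

    n_in, n_out: particles arriving at and leaving the slab
    n_atoms:     number of atoms (nuclei) within `area` of the slab
    area:        slab area in m^2
    """
    stopped_fraction = (n_in - n_out) / n_in
    # Stopped fraction = covered fraction = n_atoms * sigma / area.
    sigma = stopped_fraction * area / n_atoms
    # Treat the cross section as a disk: sigma = pi * r**2.
    return math.sqrt(sigma / math.pi)

# Hypothetical numbers: 1e22 atoms behind 1 cm^2, 2827 of 1e6 particles stopped.
radius = nuclear_radius(n_in=1_000_000, n_out=997_173, n_atoms=1e22, area=1e-4)
print(f"estimated nuclear radius = {radius:.2e} m")
```

With these made-up counts the estimate comes out near 3 fermis, inside the range quoted in the text.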

It might be thought that it would be a good idea to use some natural length as our unit of length, say the radius of the earth or some fraction of it. The meter was originally intended to be such a unit and was defined to be $(\pi/2)\times10^{-7}$ times the earth's radius.

Perfectly precise measurements of distances or times are not permitted by the laws of nature. We have mentioned earlier that the errors in a measurement of the position of an object must be at least as large as

$$\Delta x \geq \frac{\hbar}{2\,\Delta p},$$

where $\hbar$ is a small quantity, the reduced "Planck's constant," and $\Delta p$ is the error in our knowledge of the momentum (mass times velocity) of the object whose position we are measuring. It was also mentioned that the uncertainty in position measurements is related to the wave nature of particles.

The relativity of space and time implies that time measurements have also a minimum error, given in fact by

$$\Delta t \geq \frac{\hbar}{2\,\Delta E},$$

where $\Delta E$ is the error in our knowledge of the energy of the process whose time period we are measuring. If we wish to know more precisely when something happened we must know less about what happened, because our knowledge of the energy involved will be less. The time uncertainty is also related to the wave nature of matter.

Probability

Chance and likelihood

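Consider a random walk in which each step is one unit forward or backward with equal probability, and let $D_N$ be the net distance from the starting point after $N$ steps. We can show that the expected value of $D_N^2$ is just $N$.

The expected value of $D_1^2$ is 1, since a single step is $\pm 1$.

After $N$ steps we have $D_N = D_{N-1} \pm 1$, so that $D_N^2 = D_{N-1}^2 \pm 2D_{N-1} + 1$.

The average expectation is then

$$\langle D_N^2 \rangle = \langle D_{N-1}^2 \rangle + 1 = N.$$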

We expect that for small ∆x the chance of D landing in the interval is proportional to ∆x, the width of the interval. So we can write

P(x, ∆x) = p(x) ∆x

The function p(x) is called the probability density.

For large $N$, $p(x)$ is the same for all reasonable distributions in individual step lengths, and depends only on $N$. We plot $p(x)$ for three values of $N$ in Fig. 6-7. You will notice that the "half-widths" (typical spread from $x = 0$) of these curves is $\sqrt{N}$.

You may notice also that the value of $p(x)$ near zero is inversely proportional to $\sqrt{N}$.

This is the normal or gaussian probability density. It has the mathematical form

$$p(x) = \frac{1}{\sigma\sqrt{2\pi}}\,e^{-x^2/2\sigma^2},$$

where $\sigma$ is called the standard deviation and is given, in our case, by $\sigma = \sqrt{N}$.

We remarked earlier that the motion of a molecule, or of any particle, in a gas is like a random walk. Suppose we open a bottle of an organic compound and let some of its vapor escape into the air. If there are air currents, so that the air is circulating, the currents will also carry the vapor with them. But even in perfectly still air, the vapor will gradually spread out (it will diffuse) until it has penetrated throughout the room. We might detect it by its color or odor. The individual molecules of the organic vapor spread out in still air because of the molecular motions caused by collisions with other molecules. If we know the average "step" size, and the number of steps taken per second, we can find the probability that one, or several, molecules will be found at some distance from their starting point after any particular passage of time.
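The random-walk picture can be checked with a short simulation. This is a sketch under simple assumptions: one-dimensional steps of unit length, forward or backward with equal chance. The function name, the seed, and the number of walks are arbitrary choices for illustration.

```python
import random

def mean_square_distance(n_steps, n_walks, rng):
    """Average of D**2 over many walks of n_steps unit steps (+1 or -1)."""
    total = 0
    for _ in range(n_walks):
        d = sum(rng.choice((-1, 1)) for _ in range(n_steps))
        total += d * d
    return total / n_walks

rng = random.Random(0)  # fixed seed so the run is repeatable
results = {n: mean_square_distance(n, 2000, rng) for n in (10, 50, 200)}

for n, msd in results.items():
    # The mean square distance should be close to n, so the typical
    # (root-mean-square) distance grows like the square root of n.
    print(f"N = {n:4d}   <D^2> = {msd:7.2f}   rms = {msd ** 0.5:5.2f}")
```

The typical distance from the start grows only as the square root of the number of steps, which is why diffusion across a room is slow compared with the speed of the individual molecules.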

The uncertainty principle

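It is one of the fundamental results of quantum mechanics that the two functions $p_1(x)$ and $p_2(v)$, the probability densities for the position and the velocity of a particle, cannot both be made arbitrarily narrow. If $[\Delta x]$ and $[\Delta v]$ are the typical widths of the two curves and $m$ is the mass of the particle, nature demands that

$$[\Delta x]\cdot[\Delta v] \geq \frac{\hbar}{2m}.$$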

This equation is a statement of the Heisenberg uncertainty principle.

This equation says that if we try to "pin down" a particle by forcing it to be at a particular place, it ends up by having a high speed. Or if we try to force it to go very slowly, or at a precise velocity, it "spreads out" so that we do not know very well just where it is.

The necessary uncertainty in our specification of the position of a particle becomes most important when we wish to describe the structure of atoms. In the hydrogen atom, which has a nucleus of one proton with one electron outside of the nucleus, the uncertainty in the position of the electron is as large as the atom itself! We cannot, therefore, properly speak of the electron moving in some "orbit" around the proton. The most we can say is that there is a certain chance $p(r)\,\Delta V$ of observing the electron in an element of volume $\Delta V$ at the distance $r$ from the proton. The probability density $p(r)$ is given by quantum mechanics. For an undisturbed hydrogen atom $p(r) = A e^{-2r/a}$, a function that falls off smoothly with distance. The number $a$ is the "typical" radius, where the function is decreasing rapidly. Since there is a small probability of finding the electron at distances from the nucleus much greater than $a$, we may think of $a$ as "the radius of the atom," about $10^{-10}$ meter.

Our best "picture" of a hydrogen atom is a nucleus surrounded by an "electron cloud" (although we really mean a "probability cloud"). The electron is there somewhere, but nature permits us to know only the chance of finding it at any particular place.

Planetary motion

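Every object in the universe attracts every other object with a force which for any two bodies is proportional to the mass of each and varies inversely as the square of the distance between them. This statement can be expressed mathematically by the equation

$$F = G\,\frac{m m'}{r^2},$$

where $m$ and $m'$ are the two masses, $r$ is the distance between them, and $G$ is the gravitational constant.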

An object responds to a force by accelerating in the direction of the force by an amount that is inversely proportional to the mass of the object.

Tycho Brahe had an idea that was different from anything proposed by the ancients: his idea was that these debates about the nature of the motions of the planets would best be resolved if the actual positions of the planets in the sky were measured sufficiently accurately. If measurement showed exactly how the planets moved, then perhaps it would be possible to establish one or another viewpoint. This was a tremendous idea: that to find something out, it is better to perform some careful experiments than to carry on deep philosophical arguments. Pursuing this idea, Tycho Brahe studied the positions of the planets for many years in his observatory on the island of Hven, near Copenhagen. He made voluminous tables, which were then studied by the mathematician Kepler, after Tycho's death.

Kepler's laws

I. Each planet moves around the sun in an ellipse, with the sun at one focus.

II. The radius vector from the sun to the planet sweeps out equal areas in equal intervals of time.

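III. The squares of the periods of any two planets are proportional to the cubes of the semimajor axes of their respective orbits: $T^2 \propto a^3$, that is, $T \propto a^{3/2}$, where $T$ is the period and $a$ the semimajor axis.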
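Kepler's third law is easy to try out. The sketch below assumes periods measured in years and semimajor axes in astronomical units, so that the proportionality constant is 1 for bodies orbiting the sun. The axis values are rounded textbook figures, and the function name is illustrative.

```python
def period_in_years(semimajor_axis_au):
    """Orbital period from Kepler's third law, T = a**1.5,
    with T in years and a in astronomical units (sun-centered orbits)."""
    return semimajor_axis_au ** 1.5

# Rounded semimajor axes in astronomical units.
semimajor_axes_au = {"Earth": 1.000, "Mars": 1.524, "Jupiter": 5.203}
periods = {name: period_in_years(a) for name, a in semimajor_axes_au.items()}

for name, years in periods.items():
    print(f"{name:8s} a = {semimajor_axes_au[name]:.3f} AU   T = {years:.2f} yr")
```

The computed periods for Mars and Jupiter land close to the observed values of about 1.88 and 11.86 years.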

Development of dynamics

Newton modified Galileo's idea of inertia, saying that the only way to change the motion of a body is to use force.

For example, if a stone is attached to a string and is whirling around in a circle, it takes a force to keep it in the circle. We have to pull on the string. In fact, the law is that the acceleration produced by the force is inversely proportional to the mass, or the force is proportional to the mass times the acceleration. The more massive a thing is, the stronger the force required to produce a given acceleration.

Newton's laws of gravitation

There must be a force, inversely as the square of the distance, directed in a line between the two objects.

Being a man of considerable feeling for generalities, Newton supposed, of course, that this relationship applied more generally than just to the sun holding the planets. It was already known, for example, that the planet Jupiter had moons going around it as the moon of the earth goes around the earth, and Newton felt certain that each planet held its moons with a force. He already knew of the force holding us on the earth, so he proposed that this was a universal force: that everything pulls everything else.

We can calculate from the radius of the moon's orbit (which is about 240,000 miles) and how long it takes to go around the earth (approximately 29 days), how far the moon moves in its orbit in 1 second, and can then calculate how far it falls in one second. This distance turns out to be roughly 1/20 of an inch in a second.
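The estimate can be reproduced in a few lines. The sketch assumes a circular orbit, so the moon falls away from a straight line by $\tfrac{1}{2}(v^2/R)t^2$ in a short time $t$. It is run once with the round figures quoted above and once with a sidereal month of 27.3 days, which is an added assumption. The function name is illustrative.

```python
import math

MILE_M = 1609.344   # meters per mile
INCH_M = 0.0254     # meters per inch

def moon_fall_inches(orbit_radius_miles, period_days, t=1.0):
    """Distance (inches) the moon falls below a straight-line path in t seconds,
    assuming a circular orbit."""
    r = orbit_radius_miles * MILE_M
    period_s = period_days * 86400.0
    # Centripetal acceleration v**2 / r with v = 2*pi*r / period.
    accel = 4.0 * math.pi**2 * r / period_s**2
    fall_m = 0.5 * accel * t**2
    return fall_m / INCH_M

fall_quoted = moon_fall_inches(240_000, 29.0)     # figures quoted in the text
fall_sidereal = moon_fall_inches(240_000, 27.3)   # assumed sidereal month
print(f"fall in 1 s: {fall_quoted:.3f} in (29 d), {fall_sidereal:.3f} in (27.3 d)")
```

Either choice of period gives a fall of about a twentieth of an inch in one second.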

Wishing to put this theory of gravitation to a test by similar calculations, Newton made his calculations very carefully and found a discrepancy so large that he regarded the theory as contradicted by facts, and did not publish his results. Six years later a new measurement of the size of the earth showed that the astronomers had been using an incorrect distance to the moon. When Newton heard of this, he made the calculation again, with the corrected figures, and obtained beautiful agreement.

The moon falls in the sense that it falls away from the straight line that it would pursue if there were no forces. Let us take an example on the surface of the earth. An object released near the earth's surface will fall 16 feet in the first second. An object shot out horizontally will also fall 16 feet; even though it is moving horizontally, it still falls the same 16 feet in the same time.

If the bullet moves at 5 miles a second, it then will continue to fall toward the earth at the same rate of 16 feet each second, but will never get any closer because the earth keeps curving away from it.
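The figure of 5 miles a second follows from simple geometry: it is the horizontal distance over which the earth's surface curves away by 16 feet from a tangent line. The sketch below assumes an earth radius of about 4000 miles (a rounded value not stated in these notes) and uses Pythagoras' theorem. The function name is illustrative.

```python
import math

FEET_PER_MILE = 5280.0

def tangent_distance_miles(earth_radius_miles, drop_feet):
    """Horizontal distance (miles) along a tangent at which the earth's
    surface lies `drop_feet` below the tangent line."""
    radius_ft = earth_radius_miles * FEET_PER_MILE
    # Right triangle: (R + s)**2 = R**2 + x**2, so x**2 = 2*R*s + s**2.
    x_ft = math.sqrt(2.0 * radius_ft * drop_feet + drop_feet**2)
    return x_ft / FEET_PER_MILE

# A bullet that covers this distance in the one second it takes to fall
# 16 feet stays at the same height above the curving surface.
speed_miles_per_s = tangent_distance_miles(4000.0, 16.0)
print(f"required speed = {speed_miles_per_s:.2f} miles per second")
```

The result is a little under 5 miles per second, in line with the value quoted above.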

The pull of the moon for the earth and for the water is "balanced" at the center. But the water which is closer to the moon is pulled more than the average and the water which is farther away from it is pulled less than the average. Furthermore, the water can flow while the more rigid earth cannot. The true picture is a combination of these two things.

The earth and the moon both go around a central position, each falling toward this common position, as shown in Fig. 7-5. This motion around the common center is what balances the fall of each. So the earth is not going in a straight line either; it travels in a circle. The water on the far side is "unbalanced" because the moon's attraction there is weaker than it is at the center of the earth, where it just balances the "centrifugal force." The result of this imbalance is that the water rises up, away from the center of the earth. On the near side, the attraction from the moon is stronger, and the imbalance is in the opposite direction in space, but again away from the center of the earth. The net result is that we get two tidal bulges.

Universal gravitation

There was once a certain difficulty with the moons of Jupiter that is worth remarking on. These satellites were studied very carefully by Roemer, who noticed that the moons sometimes seemed to be ahead of schedule, and sometimes behind. (One can find their schedules by waiting a very long time and finding out how long it takes on the average for the moons to go around.) Now they were ahead when Jupiter was particularly close to the earth and they were behind when Jupiter was farther from the earth. This would have been a very difficult thing to explain according to the law of gravitation; it would have been, in fact, the death of this wonderful theory if there were no other explanation. If a law does not work even in one place where it ought to, it is just wrong. But the reason for this discrepancy was very simple and beautiful: it takes a little while to see the moons of Jupiter because of the time it takes light to travel from Jupiter to the earth. When Jupiter is closer to the earth the time is a little less, and when it is farther from the earth, the time is more. This is why moons appear to be, on the average, a little ahead or a little behind, depending on whether they are closer to or farther from the earth. This phenomenon showed that light does not travel instantaneously, and furnished the first estimate of the speed of light. This was done in 1676.

Attempts were made to analyze the motions of Jupiter, Saturn, and Uranus on the basis of the law of gravitation. The effects of each of these planets on each other were calculated to see whether or not the tiny deviations and irregularities in these motions could be completely understood from this one law. Lo and behold, for Jupiter and Saturn, all was well, but Uranus was "weird." It behaved in a very peculiar manner. It was not travelling in an exact ellipse, but that was understandable, because of the attractions of Jupiter and Saturn. But even if allowance were made for these attractions, Uranus still was not going right, so the laws of gravitation were in danger of being overturned, a possibility that could not be ruled out. Two men, Adams and Leverrier, in England and France, independently, arrived at another possibility: perhaps there is another planet, dark and invisible, which men had not seen. This planet, N, could pull on Uranus. They calculated where such a planet would have to be in order to cause the observed perturbations. They sent messages to the respective observatories, saying, "Gentlemen, point your telescope to such and such a place, and you will see a new planet." It often depends on with whom you are working as to whether they pay any attention to you or not. They did pay attention to Leverrier; they looked, and there planet N was! The other observatory then also looked very quickly in the next few days and saw it too.

Cavendish's experiment

All the masses and distances are known. You say, "We knew it already for the earth." Yes, but we did not know the mass of the earth. By knowing G from this experiment and by knowing how strongly the earth attracts, we can indirectly learn how great is the mass of the earth! This experiment has been called "weighing the earth." Cavendish claimed he was weighing the earth, but what he was measuring was the coefficient G of the gravity law. This is the only way in which the mass of the earth can be determined. G turns out to be $6.670\times10^{-11}$ newton·m²/kg².
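Once G is known, "weighing the earth" is a one-line calculation. The sketch below assumes the standard values for the acceleration of gravity at the surface and for the earth's mean radius (neither is given in these notes) and equates the weight $mg$ of a body at the surface to $GMm/R^2$.

```python
G = 6.670e-11         # newton·m²/kg², gravitational constant
G_SURFACE = 9.81      # m/s², assumed acceleration of gravity at the surface
EARTH_RADIUS = 6.371e6  # m, assumed mean radius of the earth

def earth_mass(g_surface, radius, big_g):
    """Mass M (kg) from m*g = G*M*m / R**2, i.e. M = g * R**2 / G."""
    return g_surface * radius**2 / big_g

mass = earth_mass(G_SURFACE, EARTH_RADIUS, G)
print(f"mass of the earth = {mass:.2e} kg")
```

The result is close to $6\times10^{24}$ kg.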

Let us consider the time it takes light to go across a proton, about $10^{-24}$ second.

It is very interesting that this force is exactly proportional to the mass with great precision, because if it were not exactly proportional there would be some effect by which inertia and weight would differ. The absence of such an effect has been checked with great accuracy by an experiment done first by Eötvös in 1909 and more recently by Dicke. For all substances tried, the masses and weights are exactly proportional within 1 part in 1,000,000,000, or less. This is a remarkable experiment.

Gravity and relativity

Newton's laws of dynamics

Momentum and forces

Galileo made a great advance in the understanding of motion when he discovered the principle of inertia: If an object is left alone, is not disturbed, it continues to move with a constant velocity in a straight line if it was originally moving, or it continues to stand still if it was just standing still.

Newton wrote down three laws: The First Law was a mere restatement of the Galilean principle of inertia just described. The Second Law gave a specific way of determining how the velocity changes under different influences called forces. The Third Law describes the forces to some extent.

We use the term mass as a quantitative measure of inertia, and we may measure mass, for example, by swinging an object in a circle at a certain speed and measuring how much force we need to keep it in the circle. In this way we find a certain quantity of mass for every object. Now the momentum of an object is a product of two parts: its mass and its velocity. Thus Newton's Second Law may be written mathematically this way:

$$F = \frac{d}{dt}(mv).$$

The time-rate-of-change of a quantity called momentum is proportional to the force. For a constant mass

$$F = m\frac{dv}{dt} = ma.$$

The acceleration $a$ is the rate of change of the velocity.

An object moving in a circle of radius $R$ with a certain speed $v$ along the circle falls away from a straight-line path by a distance equal to $\tfrac{1}{2}(v^2/R)t^2$ if $t$ is very small. Thus the formula for acceleration at right angles to the motion is

$$a = \frac{v^2}{R},$$

and a force $F$ at right angles to the velocity will cause an object of mass $m$ to move in a curved path whose radius of curvature is

$$R = \frac{mv^2}{F}.$$

Newton third law

Action equals reaction. Suppose we have two small bodies, say particles, and suppose that the first one exerts a force on the second one, pushing it with a certain force. Then, simultaneously, according to Newton's Third Law, the second particle will push on the first with an equal force, in the opposite direction; furthermore, these forces effectively act in the same line.

Conservation of momentum

Suppose, for simplicity, that we have just two interacting particles, possibly of different mass, and numbered 1 and 2. The forces between them are equal and opposite; what are the consequences? According to Newton's Second Law, force is the time rate of change of the momentum, so we conclude that the rate of change of momentum of particle 1 is equal to minus the rate of change of momentum of particle 2. This means that if we add the momentum of particle 1 to the momentum of particle 2, the rate of change of the sum of these, due to the mutual forces (called internal forces) between particles, is zero:

$$\frac{d(p_1 + p_2)}{dt} = 0.$$
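The argument can be illustrated with a small simulation. This is only a sketch: it assumes two particles on a line joined by a spring-like force (any equal-and-opposite pair would do) and steps them forward with a simple Euler update. The masses, the spring constant, and the step size are arbitrary illustrative values.

```python
def simulate_total_momentum(m1, m2, x1, x2, v1, v2, k, dt, steps):
    """Advance two particles under equal and opposite internal forces and
    return the total momentum recorded after every step."""
    momenta = []
    for _ in range(steps):
        force_on_1 = k * (x2 - x1)   # pull of particle 2 on particle 1
        force_on_2 = -force_on_1     # Third Law: equal and opposite
        v1 += force_on_1 / m1 * dt   # Second Law applied to each particle
        v2 += force_on_2 / m2 * dt
        x1 += v1 * dt
        x2 += v2 * dt
        momenta.append(m1 * v1 + m2 * v2)
    return momenta

p_initial = 1.0 * 2.0 + 3.0 * (-0.5)   # m1*v1 + m2*v2 at the start
momenta = simulate_total_momentum(m1=1.0, m2=3.0, x1=0.0, x2=1.0,
                                  v1=2.0, v2=-0.5, k=4.0, dt=0.001, steps=1000)
drift = max(abs(p - p_initial) for p in momenta)
print(f"initial momentum = {p_initial}, largest drift = {drift:.2e}")
```

The individual momenta change continually, but their sum stays fixed up to rounding error, whatever the masses.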

There is another interesting consequence of Newton's Second Law, to be proved later, but merely stated now. This principle is that the laws of physics will look the same whether we are standing still or moving with a uniform speed in a straight line.

Relativistic momentum

In the theory of relativity it turns out that we do have conservation of momentum; the particles have mass and the momentum is still given by $mv$, the mass times the velocity, but the mass changes with the velocity, hence the momentum also changes. The mass varies with velocity according to the law

$$m = \frac{m_0}{\sqrt{1 - v^2/c^2}},$$

where m0 is the mass of the body at rest and c is the speed of light. It is easy to see from the formula that there is negligible difference between m and m0 unless v is very large, and that for ordinary velocities the expression for momentum reduces to the old formula.
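A quick numerical look shows how small the effect is at ordinary speeds. The sketch evaluates $m_0/\sqrt{1 - v^2/c^2}$ for a fast and a slow body. The two sample speeds are illustrative choices, the slow one being roughly the orbital speed of the earth.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def relativistic_mass(m0, v):
    """Mass of a body of rest mass m0 moving at speed v (m/s), with v < c."""
    return m0 / math.sqrt(1.0 - (v / C) ** 2)

ratio_fast = relativistic_mass(1.0, 0.6 * C)   # 60 percent of the speed of light
ratio_slow = relativistic_mass(1.0, 3.0e4)     # about 30 km/s

print(f"m/m0 at 0.6 c   : {ratio_fast:.4f}")
print(f"m/m0 at 30 km/s : 1 + {ratio_slow - 1.0:.1e}")
```

At 60 percent of the speed of light the mass is up by a quarter, while at 30 km/s the change is only a few parts in a billion.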

If an electrical charge at one location is suddenly moved, the effects on another charge, at another place, do not appear instantaneously; there is a little delay.

It takes time for the influence to cross the intervening distance, which it does at 186,000 miles a second. In that tiny time the momentum of the particles is not conserved. Of course after the second charge has felt the effect of the first one and all is quieted down, the momentum equation will check out all right, but during that small interval momentum is not conserved. We represent this by saying that during this interval there is another kind of momentum besides that of the particle, $mv$, and that is momentum in the electromagnetic field. If we add the field momentum to the momentum of the particles, then momentum is conserved at any moment all the time.

To take another example: an electromagnetic field has waves, which we call light; it turns out that light also carries momentum with it, so when light impinges on an object it carries in a certain amount of momentum per second; this is equivalent to a force, because if the illuminated object is picking up a certain amount of momentum per second, its momentum is changing and the situation is exactly the same as if there were a force on it.

In quantum mechanics the difference is that when the particles are represented as particles, the momentum is still $mv$, but when the particles are represented as waves, the momentum is measured by the number of waves per centimeter: the greater this number of waves, the greater the momentum. In spite of the differences, the law of conservation of momentum holds also in quantum mechanics.

Symmetry in Physics

Professor Hermann Weyl has given this definition of symmetry: a thing is symmetrical if one can subject it to a certain operation and it appears exactly the same after the operation.

The laws of physics are symmetrical for translational displacements and rotations. It should make no difference in which direction we choose the axes.

Friction

The frictional force is proportional to the normal force, and has a more or less constant coefficient; that is,

F=μN

where μ is called the coefficient of friction. The coefficient of friction is independent of the weight.

That the formula F=μN is approximately correct can be demonstrated by a simple experiment. We set up a plane, inclined at a small angle θ, and place a block of weight W on the plane. We then tilt the plane at a steeper angle, until the block just begins to slide from its own weight. The component of the weight downward along the plane is W sin θ, and this must equal the frictional force F when the block is sliding uniformly. The component of the weight normal to the plane is W cos θ, and this is the normal force N. With these values, the formula becomes W sin θ = μ W cos θ, from which we get μ = tan θ. If this law were exactly true, an object would start to slide at some definite inclination. If the same block is loaded by putting extra weight on it, then, although W is increased, all the forces in the formula are increased in the same proportion, and W cancels out.
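The inclined-plane argument can be checked directly. The sketch assumes the idealized law F = μN with a hypothetical coefficient μ = 0.3. It computes the angle at which sliding starts and confirms that the force balance at that angle holds for any weight.

```python
import math

def sliding_angle_deg(mu):
    """Inclination (degrees) at which the block just slides: tan(theta) = mu."""
    return math.degrees(math.atan(mu))

def net_force_along_plane(weight, angle_deg, mu):
    """Downhill pull W*sin(theta) minus the friction mu*W*cos(theta)."""
    theta = math.radians(angle_deg)
    return weight * math.sin(theta) - mu * weight * math.cos(theta)

MU = 0.3  # hypothetical coefficient of friction
angle = sliding_angle_deg(MU)
residuals = [net_force_along_plane(w, angle, MU) for w in (1.0, 10.0, 100.0)]

print(f"block starts to slide at {angle:.2f} degrees")
print("net force at that angle for three weights:", residuals)
```

The balance holds at the same angle for all three weights, which is the statement that W cancels out.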

Molecular forces

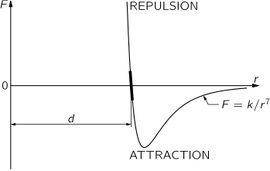

The force between atoms is illustrated schematically in the Figure, where the force F between two atoms is plotted as a function of the distance r between them. There are different cases: in the water molecule, for example, the negative charges sit more on the oxygen, and the mean positions of the negative charges and of the positive charges are not at the same point; consequently, another molecule nearby feels a relatively large force, which is called a dipole-dipole force. However, for many systems the charges are very much better balanced, in particular for oxygen gas, which is perfectly symmetrical. In this case, although the minus charges and the plus charges are dispersed over the molecule, the distribution is such that the center of the minus charges and the center of the plus charges coincide. A molecule where the centers do not coincide is called a polar molecule, and charge times the separation between centers is called the dipole moment. A nonpolar molecule is one in which the centers of the charges coincide. For all nonpolar molecules, in which all the electrical forces are neutralized, it nevertheless turns out that the force at very large distances is an attraction and varies inversely as the seventh power of the distance, or $F = k/r^7$, where k is a constant that depends on the molecules.

Since the molecular forces attract at large distances and repel at short distances, as shown in the Figure, we can make up solids in which all the atoms are held together by their attractions and held apart by the repulsion that sets in when they are too close together.

Fundamental forces fields

If any two bodies are electrically charged, there is an electrical force between them, and if the magnitudes of the charges are $q_1$ and $q_2$, respectively, the force varies inversely as the square of the distance $r$ between the charges, or

$$F = \frac{q_1 q_2}{4\pi\epsilon_0 r^2},$$

with $1/4\pi\epsilon_0 \approx 8.99\times10^{9}$ newton·m²/coul². For unlike charges, this law is like the law of gravitation, but for like charges the force is repulsive and the sign (direction) is reversed.

The most important charge of all is the charge on a single electron, which is $1.60\times10^{-19}$ coulomb.

The combination $q_{el}^2/4\pi\epsilon_0$, in which $q_{el}$ is the charge on an electron, occurs frequently, and to simplify calculations it has been defined by the symbol $e^2$; its numerical value in the mks system of units turns out to be $(1.52\times10^{-14})^2$. The advantage of using the constant in this form is that the force between two electrons in newtons can then be written simply as $e^2/r^2$, with $r$ in meters.

To analyze the force by means of the field concept, we say that the charge $q_1$ at P produces a "condition" at R, such that when the charge $q_2$ is placed at R it "feels" the force $F = q_2 E$. E is the "condition" produced by $q_1$, we say, and F is the response of $q_2$ to E. E is called an electric field, and it is a vector. In the case of gravitation, we can do exactly the same thing. The force on $m_2$ is the mass $m_2$ times the field C produced by $m_1$; that is, $F = m_2 C$.

The total electric field produced by a number of sources is the vector sum of the electric fields produced by the first source, the second source, and so on.

Closely related to electrical force is another kind, called magnetic force, and this too is analyzed in terms of a field.

The first part of the experiment is to apply a negative voltage to the lower plate, which means that extra electrons have been placed on the lower plate. Since like charges repel, the light spot on the screen instantly shifts upward. (We could also say this in another way: that the electrons "felt" the field, and responded by deflecting upward.) We next reverse the voltage, making the upper plate negative. The light spot on the screen now jumps below the center, showing that the electrons in the beam were repelled by those in the plate above them. (Or we could say again that the electrons had "responded" to the field, which is now in the reverse direction.)

The second part of the experiment is to disconnect the voltage from the plates and test the effect of a magnetic field on the electron beam. This is done by means of a horseshoe magnet, whose poles are far enough apart to more or less straddle the tube. Suppose we hold the magnet below the tube in the same orientation as the letter U, with its poles up and part of the tube in between. We note that the light spot is deflected, say, upward, as the magnet approaches the tube from below. So it appears that the magnet repels the electron beam. However, it is not that simple, for if we invert the magnet without reversing the poles side-for-side, and now approach the tube from above, the spot still moves upward, so the electron beam is not repelled; instead, it appears to be attracted this time. Now we start again, restoring the magnet to its original U orientation and holding it below the tube, as before. Yes, the spot is still deflected upward; but now turn the magnet 180 degrees around a vertical axis, so that it is still in the U position but the poles are reversed side-for-side. Behold, the spot now jumps downward, and stays down, even if we invert the magnet and approach from above, as before.

Across the magnet from one pole to the other there is a magnetic field. This field has a direction which is always away from one particular pole (which we could mark) and toward the other. Inverting the magnet did not change the direction of the field, but reversing the poles side-for-side did reverse its direction. For example, if the electron velocity were horizontal in the x-direction and the magnetic field were also horizontal but in the y-direction, the magnetic force on the moving electrons would be in the z-direction, i.e., up or down, depending on whether the field was in the positive or negative y-direction.

The force on a charged object depends upon its motion; if, when the object is standing still at a given place, there is some force, this is taken to be proportional to the charge, the coefficient being what we call the electric field. When the object moves the force may be different, and the correction, the new "piece" of force, turns out to be dependent exactly linearly on the velocity, but at right angles to v and to another vector quantity which we call the magnetic induction B.

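The total electric and magnetic force on a moving charge $q$ has the components

$$F_x = q(E_x + v_y B_z - v_z B_y),$$
$$F_y = q(E_y + v_z B_x - v_x B_z),$$
$$F_z = q(E_z + v_x B_y - v_y B_x).$$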
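The component form of the force on a moving charge is easy to evaluate. The sketch below writes out the electric term plus the velocity-dependent magnetic term, component by component, and applies it to the electron-beam case described earlier: velocity along x, magnetic field along y. The field strength and speed are illustrative values.

```python
def lorentz_force(q, E, v, B):
    """Force components on a charge q with velocity v in fields E and B.
    E, v and B are (x, y, z) tuples in SI units."""
    Ex, Ey, Ez = E
    vx, vy, vz = v
    Bx, By, Bz = B
    return (
        q * (Ex + vy * Bz - vz * By),
        q * (Ey + vz * Bx - vx * Bz),
        q * (Ez + vx * By - vy * Bx),
    )

Q_ELECTRON = -1.6e-19        # coulomb
velocity = (1.0e6, 0.0, 0.0)  # m/s, along x (illustrative)
no_E = (0.0, 0.0, 0.0)

force_plus = lorentz_force(Q_ELECTRON, no_E, velocity, (0.0, 0.01, 0.0))
force_minus = lorentz_force(Q_ELECTRON, no_E, velocity, (0.0, -0.01, 0.0))
print("B along +y:", force_plus)
print("B along -y:", force_minus)
```

The force has only a z-component, and it flips sign when the field is reversed, matching the behavior of the light spot when the magnet's poles are swapped side-for-side.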

Pseudo forces

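The laws of force as looked upon by Moe would appear as

$$m\frac{d^2x'}{dt^2} = F_{x'} = F_x - ma,$$

where $a$ is the acceleration of Moe's coordinate system relative to Joe's. That is, since Moe's coordinate system is accelerating with respect to Joe's, the extra term $ma$ comes in, and Moe must correct his forces by that amount to get Newton's laws to work.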

Another example of pseudo force is what is often called "centrifugal force." An observer in a rotating coordinate system, e.g., in a rotating box, will find mysterious forces, not accounted for by any known origin of force, throwing things outward toward the walls.

One very important feature of pseudo forces is that they are always proportional to the masses; the same is true of gravity.

Einstein put forward the famous hypothesis that accelerations give an imitation of gravitation, that the forces of acceleration (the pseudo forces) cannot be distinguished from those of gravity; it is not possible to tell how much of a given force is gravity and how much is pseudo force.

Suppose that we all lived in two dimensions, and knew nothing of a third. We think we are on a plane, but suppose we are really on the surface of a sphere. And suppose that we shoot an object along the ground, with no forces on it. Where will it go? It will appear to go in a straight line, but it has to remain on the surface of a sphere, where the shortest distance between two points is along a great circle; so it goes along a great circle. If we shoot another object similarly, but in another direction, it goes along another great circle. Because we think we are on a plane, we expect that these two bodies will continue to diverge linearly with time, but careful observation will show that if they go far enough they move closer together again, as though they were attracting each other. But they are not attracting each other; there is just something "weird" about this geometry. This particular illustration does not describe correctly the way in which Euclid's geometry is "weird," but it illustrates that if we distort the geometry sufficiently it is possible that all gravitation is related in some way to pseudo forces; that is the general idea of the Einsteinian theory of gravitation.

Nuclear forces

Forces within a nucleus do not vary inversely as the square of the distance, but die off exponentially over a certain distance $r$, as expressed by $F = (1/r^2)\exp(-r/r_0)$, where the distance $r_0$ is of the order of $10^{-13}$ centimeters.

Energy of a falling body

An object which changes its height under the influence of gravity alone has a kinetic energy $T = mv^2/2$ due to its motion during the fall, and a potential energy $mgh$, abbreviated $U = mgh$, whose sum is constant:

$$T + U = \tfrac{1}{2}mv^2 + mgh = \text{constant}.$$

As a result, the closer the planet is to the sun, the faster it is going. The rate of change of kinetic energy of an object is equal to the power expended by the forces acting on it.

The work done by the force on the object is defined by the integral

$$W = \int \mathbf{F}\cdot d\mathbf{s}.$$

Work is measured in newton·meters. A newton-meter is called a joule; work is measured in joules. Power, then, is joules per second, and that is also called a watt.

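For example, the work done by the gravity force of a mass $M$ in bringing a body of mass $m$ from a point at a distance $r_1$ to a point at the distance $r_2$ is

$$W = -GMm\int_{r_1}^{r_2}\frac{dr}{r^2} = GMm\left(\frac{1}{r_2} - \frac{1}{r_1}\right).$$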
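The work integral for gravity can be checked numerically. The sketch assumes an attractive inverse-square force of magnitude $GMm/r^2$ directed toward the center, works in units where $GMm = 1$, and compares a midpoint-rule sum with the closed form $GMm(1/r_2 - 1/r_1)$. The end points and the number of slices are arbitrary choices.

```python
def gravity_work_numeric(gmm, r1, r2, n=10_000):
    """Midpoint-rule estimate of the work done by the radial force
    F(r) = -gmm / r**2 as the body moves from r1 to r2."""
    h = (r2 - r1) / n   # negative when the body moves inward
    total = 0.0
    for i in range(n):
        r_mid = r1 + (i + 0.5) * h
        total += (-gmm / r_mid**2) * h
    return total

def gravity_work_exact(gmm, r1, r2):
    """Closed form of the same integral: gmm * (1/r2 - 1/r1)."""
    return gmm * (1.0 / r2 - 1.0 / r1)

# Body falls inward from r = 2 to r = 1 (units with GMm = 1).
w_numeric = gravity_work_numeric(1.0, 2.0, 1.0)
w_exact = gravity_work_exact(1.0, 2.0, 1.0)
print(f"numeric = {w_numeric:.8f}, exact = {w_exact:.8f}")
```

The work is positive for an inward fall and depends only on the two end distances.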

When there are friction forces the conservation of energy seems at first sight to be invalid. We have to find another form of energy. It turns out, in fact, that heat is generated in an object when it rubs another with friction.

Only the component of force in the direction of the displacement does any work.

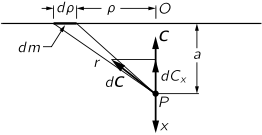

Gravitational field produced by mass distributions.

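The gravitational field produced by an infinite sheet of material with constant mass per unit area $\mu$, at a distance $a$ from the sheet, is given by the integral of

$$dC_z = \frac{G\mu\,a\,2\pi\rho\,d\rho}{(\rho^2 + a^2)^{3/2}}$$

along the sheet, where $\rho$ is the radius of a ring of the sheet centered on the foot of the perpendicular from the field point. It points along the direction orthogonal to the sheet, let us say $z$ (toward the sheet), and its magnitude is given by

$$C_z = 2\pi G\mu.$$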

The force is independent of distance a!
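The independence of distance can be checked by summing the contributions of thin rings numerically. The sketch assumes that a ring of radius ρ contributes $G\mu\,a\,2\pi\rho\,d\rho/(\rho^2+a^2)^{3/2}$ to the field normal to the sheet, works in units where $G\mu = 1$, and cuts the "infinite" sheet off at a large but finite radius. The cutoff and the number of rings are arbitrary choices.

```python
import math

def sheet_field(a, g_mu=1.0, rho_max=1.0e4, n=200_000):
    """Field normal to a uniform sheet at height a, summed over thin rings
    out to radius rho_max (midpoint rule). g_mu is the product G * mu."""
    h = rho_max / n
    total = 0.0
    for i in range(n):
        rho = (i + 0.5) * h
        # Ring mass mu * 2*pi*rho*h, distance sqrt(rho**2 + a**2),
        # and only the component normal to the sheet (factor a / distance).
        total += g_mu * a * 2.0 * math.pi * rho * h / (rho * rho + a * a) ** 1.5
    return total

fields = {a: sheet_field(a) for a in (1.0, 2.0, 5.0)}
for a, c in fields.items():
    print(f"a = {a:3.1f}   C / (2 pi G mu) = {c / (2.0 * math.pi):.5f}")
```

For each height the ratio comes out very close to 1. The small shortfall is due to the finite cutoff radius.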

The force produced by the earth at a point on the surface or outside it is the same as if all the mass of the earth were located at its center.

Conservative forces

In general the work depends upon the curve, but in special cases it does not. If it does not depend upon the curve, we say that the force is a conservative force.

If only conservative forces act, the kinetic energy T plus the potential energy U remains constant:

T + U = constant.

All the fundamental forces in nature appear to be conservative.

The special theory of relativity

The principle of relativity

The principle of relativity was first stated by Newton, in one of his corollaries to the laws of motion: "The motions of bodies included in a given space are the same among themselves, whether that space is at rest or moves uniformly forward in a straight line."

Suppose that Moe is moving in the x-direction with a uniform velocity u, and he measures the position of a certain point. He designates the "x-distance" of the point in his coordinate system as x'. Joe is at rest, and measures the position of the same point, designating its x-coordinate in his system as x. The relationship of the coordinates in the two systems is

$$x' = x - ut, \qquad t' = t. \qquad (*)$$

Newton's equations are invariant under these transformations. In the past century interest in the principle of relativity was heightened as the result of investigations into the phenomena of electricity, magnetism, and light. A long series of careful studies of these phenomena by many people culminated in Maxwell's equations of the electromagnetic field, which describe electricity, magnetism, and light in one uniform system. However, the Maxwell equations did not seem to obey the principle of relativity. That is, if we transform Maxwell's equations by the substitution of equations (*), their form does not remain the same; therefore, in a moving space ship the electrical and optical phenomena should be different from those in a stationary ship.

Suppose we are riding in a car that is going at a speed u, and light from the rear is going past the car with speed c. According to the Galilean transformation the apparent speed of the passing light, as we measure it in the car, should not be c but should be c - u. In any case, by measuring the speed of the light going past the car (if the Galilean transformation is correct for light), one could determine the speed of the car. A number of experiments based on this general idea were performed to determine the velocity of the earth, but they all failed; they gave no velocity at all.

The Lorentz transformation

In the meantime, H. A. Lorentz noticed a remarkable and curious thing when he made the following substitutions in the Maxwell equations:

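$$x' = \frac{x - ut}{\sqrt{1 - u^2/c^2}}, \qquad y' = y, \qquad z' = z, \qquad t' = \frac{t - ux/c^2}{\sqrt{1 - u^2/c^2}} \qquad (2)$$

namely, Maxwell's equations remain in the same form when this transformation is applied to them! Equations (2) are known as a Lorentz transformation. Notice that these transformations preserve the 4-distance

$$c^2 t'^2 - x'^2 - y'^2 - z'^2 = c^2 t^2 - x^2 - y^2 - z^2.$$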
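The invariance of the 4-distance can be checked numerically in one space dimension. The sketch below assumes the standard form of the Lorentz transformation, $x' = \gamma(x - ut)$ and $t' = \gamma(t - ux/c^2)$ with $\gamma = 1/\sqrt{1 - u^2/c^2}$, and compares $c^2t^2 - x^2$ before and after. The function names and the sample event are illustrative.

```python
import math


def lorentz(x, t, u, c=1.0):
    """Transform an event (x, t) to a frame moving with velocity u along x."""
    gamma = 1.0 / math.sqrt(1.0 - (u / c) ** 2)
    return gamma * (x - u * t), gamma * (t - u * x / c**2)


def interval(x, t, c=1.0):
    """The one-dimensional 4-distance c^2 t^2 - x^2."""
    return (c * t) ** 2 - x**2


# Sample event in units where c = 1 (illustrative numbers).
x, t, u = 3.0, 5.0, 0.6
x_prime, t_prime = lorentz(x, t, u)
before = interval(x, t)
after = interval(x_prime, t_prime)
```

For this event the moving observer finds different coordinates, yet `before` and `after` agree to rounding error.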

Einstein, following a suggestion originally made by Poincare, then proposed that all the physical laws should be of such a kind that they remain unchanged under a Lorentz transformation. In other words, we should change, not the laws of electrodynamics, but the laws of mechanics. How shall we change Newton's laws so that they will remain unchanged by the Lorentz transformation?

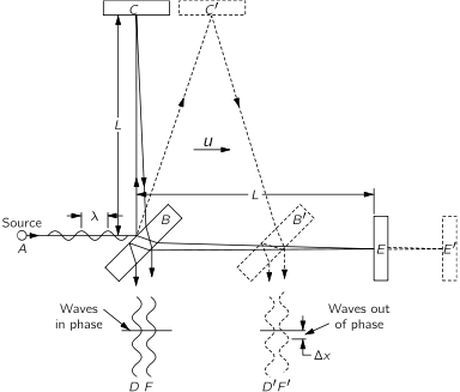

The Michelson-Morley experiment

The most famous of these experiments is one performed by Michelson and Morley in 1887. It was 18 years later before the negative results of the experiment were finally explained, by Einstein.

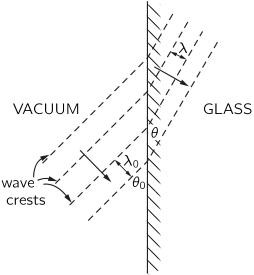

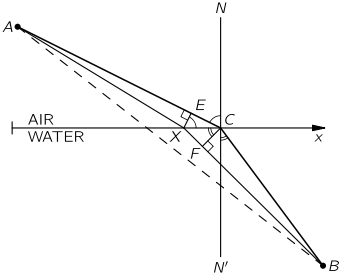

The Michelson-Morley experiment was performed with an apparatus like that shown in the Figure. This apparatus is essentially comprised of a light source A, a partially silvered glass plate B, and two mirrors C and E, all mounted on a rigid base. The mirrors are placed at equal distances L from B. The plate B splits an oncoming beam of light, and the two resulting beams continue in mutually perpendicular directions to the mirrors, where they are reflected back to B. On arriving back at B, the two beams are recombined as two superposed beams, D and F. If the time taken for the light to go from B to E and back is the same as the time from B to C and back, the emerging beams D and F will be in phase and will reinforce each other, but if the two times differ slightly, the beams will be slightly out of phase and interference will result. If the apparatus is "at rest" in the ether, the times should be precisely equal, but if it is moving toward the right with a velocity u, there should be a difference in the times.

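Let us calculate the time required for the light to go from B to E and back. Let us say that the time for light to go from plate B to mirror E is $t_1$, and the time for the return is $t_2$. While the light is on its way to the mirror, the apparatus moves a distance $ut_1$, so the light must cover $L + ut_1$; on the return trip the plate B advances to meet the light. Therefore

$$ct_1 = L + ut_1, \qquad ct_2 = L - ut_2,$$

so that $t_1 = L/(c - u)$, $t_2 = L/(c + u)$, and the total time is

$$t_1 + t_2 = \frac{2Lc}{c^2 - u^2} = \frac{2L/c}{1 - u^2/c^2}.$$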

Our second calculation will be of the time for the light to go from B to the mirror C. As before, during time $t_3$ the mirror C moves to the right a distance $ut_3$ to the position C′; in the same time, the light travels a distance $ct_3$ along the hypotenuse of a triangle, which is BC′. For this right triangle we have

$$(ct_3)^2 = L^2 + (ut_3)^2, \qquad \text{so} \qquad t_3 = \frac{L}{\sqrt{c^2 - u^2}}.$$

For the return trip from C′ the distance is the same, as can be seen from the symmetry of the figure; therefore the return time is also the same, and the total time is

$$2t_3 = \frac{2L}{\sqrt{c^2 - u^2}} = \frac{2L/c}{\sqrt{1 - u^2/c^2}}.$$

We conclude that the time to go to C and back is a little less than the time to E and back, even though the mirrors are equidistant from B, and all we have to do is to measure that difference with precision.
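To get a feeling for how small the expected difference is, the two round-trip times can be evaluated directly: $L/(c-u) + L/(c+u)$ along the motion and $2L/\sqrt{c^2 - u^2}$ across it. This is a sketch under stated assumptions: the arm length is an illustrative choice, and the speed is a rounded value of the orbital speed quoted in the text.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def time_parallel(L, u, c=C):
    """Round trip B -> E -> B along the direction of motion."""
    return L / (c - u) + L / (c + u)


def time_perpendicular(L, u, c=C):
    """Round trip B -> C -> B across the direction of motion."""
    return 2.0 * L / math.sqrt(c**2 - u**2)


L_ARM = 11.0     # m, illustrative arm length (assumption)
U_EARTH = 3.0e4  # m/s, roughly 18 miles per second

dt = time_parallel(L_ARM, U_EARTH) - time_perpendicular(L_ARM, U_EARTH)
dt_approx = L_ARM * U_EARTH**2 / C**3  # leading-order estimate L u^2 / c^3
```

The difference is a few times $10^{-16}$ seconds for these numbers, which is why an interference method was needed to look for it.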

In carrying out the experiment, Michelson and Morley oriented the apparatus so that the line BE was nearly parallel to the earth's motion in its orbit (at certain times of the day and night). This orbital speed is about 18 miles per second, and any "ether drift" should be at least that much at some time of the day or night and at some time during the year. The apparatus was amply sensitive to observe such an effect, but no time difference was found; the velocity of the earth through the ether could not be detected. The result of the experiment was null.

The result of the Michelson-Morley experiment was very puzzling and most disturbing. The first fruitful idea for finding a way out of the impasse came from Lorentz. He suggested that material bodies contract when they are moving, and that this foreshortening is only in the direction of the motion, and also, that if the length is $L_0$ when a body is at rest, then when it moves with speed u parallel to its length, the new length, which we call $L_\parallel$, is given by

$$L_\parallel = L_0 \sqrt{1 - u^2/c^2}.$$

When this modification is applied to the Michelson-Morley interferometer apparatus, the distance from B to C does not change, but the distance from B to E is shortened to $L\sqrt{1 - u^2/c^2}$, and the time for the light to go from B to E and back becomes

$$\frac{(2L/c)\sqrt{1 - u^2/c^2}}{1 - u^2/c^2} = \frac{2L/c}{\sqrt{1 - u^2/c^2}},$$

which is the same as the time to go from B to C and back.
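A short numerical sketch shows the contraction removing the time difference. It assumes the round-trip times $L/(c-u) + L/(c+u)$ along the motion and $2L/\sqrt{c^2-u^2}$ across it, with the parallel arm shortened by the factor $\sqrt{1 - u^2/c^2}$. The units ($c = 1$) and the speed are illustrative choices.

```python
import math


def round_trip_parallel(length, u, c):
    """Round-trip light time along the direction of motion."""
    return length / (c - u) + length / (c + u)


def round_trip_perpendicular(length, u, c):
    """Round-trip light time across the direction of motion."""
    return 2.0 * length / math.sqrt(c**2 - u**2)


c, u, L = 1.0, 0.6, 1.0  # illustrative values in units where c = 1

contracted = L * math.sqrt(1.0 - (u / c) ** 2)  # Lorentz-contracted arm
t_uncontracted = round_trip_parallel(L, u, c)
t_par = round_trip_parallel(contracted, u, c)
t_perp = round_trip_perpendicular(L, u, c)
```

Without the contraction the parallel trip takes longer; with it, `t_par` and `t_perp` coincide, which is the null result.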

The transformation of time

In checking out whether the contraction idea is in harmony with the facts in other experiments, it turns out that everything is correct provided that the times are also modified, in the manner expressed in the time equation of the Lorentz transformation (2). In other words, when an outsider sees a man in a space ship lighting a cigar, all the actions appear to be slower than normal, while to the man inside, everything moves at a normal rate. So not only must the lengths shorten, but also the time-measuring instruments ("clocks") must apparently slow down.

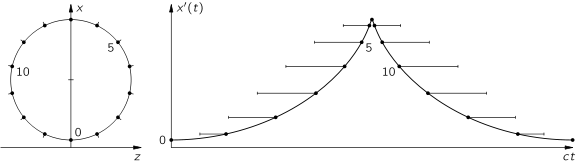

To test the time dilation we choose a rather silly kind of clock: it is a rod (meter stick) with a mirror at each end, and when we start a light signal between the mirrors, the light keeps going up and down, making a click every time it comes down, like a standard ticking clock. We build two such clocks, with exactly the same lengths, and synchronize them by starting them together; then they agree always thereafter, because they are the same in length, and light always travels with speed c. We give one of these clocks to a man to take along in his space ship, and he mounts the rod perpendicular to the direction of motion of the ship; then the length of the rod will not change. When the external observer looks at the clock going by, he sees that the light, in going from mirror to mirror, is "really" taking a zigzag path, since the rod is moving sidewise all the while. If in a given time the rod moves forward a distance proportional to u, the distance the light travels in the same time is proportional to c, and the vertical distance is therefore proportional to $\sqrt{c^2 - u^2}$. That is, it takes a longer time for light to go from end to end in the moving clock than in the stationary clock.

A very interesting example of the slowing of time with motion is furnished by mu-mesons (muons), which are particles that disintegrate spontaneously after an average lifetime of $2.2 \times 10^{-6}$ sec. They come to the earth in cosmic rays, and can also be produced artificially in the laboratory. Some of them disintegrate in midair, but the remainder disintegrate only after they encounter a piece of material and stop. It is clear that in its short lifetime a muon cannot travel, even at the speed of light, much more than 600 meters. But although the muons are created at the top of the atmosphere, some 10 kilometers up, yet they are actually found in a laboratory down here, in cosmic rays. How can that be? The answer is that different muons move at various speeds, some of which are very close to the speed of light. While from their own point of view they live only about 2 μsec, from our point of view they live considerably longer, enough longer that they may reach the earth. The factor by which the time is increased has already been given as

$$\frac{1}{\sqrt{1 - u^2/c^2}}.$$
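The muon argument can be put into numbers. The sketch below assumes a rest lifetime of $2.2 \times 10^{-6}$ sec and the dilation factor $1/\sqrt{1 - u^2/c^2}$; the chosen speed of 0.999c is an illustrative assumption, since real cosmic-ray muons arrive with a spread of speeds.

```python
import math

C = 299_792_458.0  # speed of light, m/s
TAU = 2.2e-6       # s, mean muon lifetime at rest


def gamma(v, c=C):
    """Time-dilation factor 1 / sqrt(1 - v^2 / c^2)."""
    return 1.0 / math.sqrt(1.0 - (v / c) ** 2)


def mean_range(v, dilated=True):
    """Average distance covered before decay, with or without time dilation."""
    lifetime = TAU * gamma(v) if dilated else TAU
    return v * lifetime


v = 0.999 * C  # illustrative speed (assumption)
naive = mean_range(v, dilated=False)   # a bit over 600 m
actual = mean_range(v, dilated=True)   # more than 10 km
```

Without dilation the muon would cover only a few hundred meters; with it, the average range at this speed exceeds the 10 kilometers of atmosphere.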

Simultaneity

Events that occur at two separated places at the same time, as seen by Moe in S', do not happen at the same time as viewed by Joe in S.

Suppose that a man moving in a space ship (system S') has placed a clock at each end of the ship and is interested in making sure that the two clocks are in synchronism. How can the clocks be synchronized? There are many ways. One way, involving very little calculation, would be first to locate exactly the midpoint between the clocks. Then from this station we send out a light signal which will go both ways at the same speed and will arrive at both clocks, clearly, at the same time. This simultaneous arrival of the signals can be used to synchronize the clocks. Let us then suppose that the man in S' synchronizes his clocks by this particular method. Let us see whether an observer in system S would agree that the two clocks are synchronous. The man in S' has a right to believe they are, because he does not know that he is moving. But the man in S reasons that since the ship is moving forward, the clock in the front end was running away from the light signal, hence the light had to go more than halfway in order to catch up; the rear clock, however, was advancing to meet the light signal, so this distance was shorter. Therefore the signal reached the rear clock first, although the man in S' thought that the signals arrived simultaneously. We thus see that when a man in a space ship thinks the times at two locations are simultaneous, equal values of t' in his coordinate system must correspond to different values of t in the other coordinate system!
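The same conclusion follows from the time part of the inverse Lorentz transformation, $t = (t' + ux'/c^2)/\sqrt{1 - u^2/c^2}$. The sketch below takes two events that are simultaneous on the ship ($t' = 0$ at the rear and front clocks) and computes their times in S. The ship length, speed, and units ($c = 1$) are illustrative assumptions.

```python
import math


def time_in_S(x_prime, t_prime, u, c):
    """Time in S of an event with ship coordinates (x', t')."""
    gamma = 1.0 / math.sqrt(1.0 - (u / c) ** 2)
    return gamma * (t_prime + u * x_prime / c**2)


c, u, ship_length = 1.0, 0.6, 10.0  # illustrative values, c = 1

# Signal arrivals, simultaneous on the ship at t' = 0.
t_rear = time_in_S(-ship_length / 2, 0.0, u, c)
t_front = time_in_S(+ship_length / 2, 0.0, u, c)
```

In S the rear event comes out earlier than the front event, matching the argument that the signal reaches the rear clock first.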

Relativistic mechanics

In the Einstein theory of relativity, Newton's laws are still valid but the mass of a body depends on its velocity:

$$m = \frac{m_0}{\sqrt{1 - v^2/c^2}},$$

where the "rest mass" $m_0$ represents the mass of a body that is not moving and c is the speed of light, which is about $3 \times 10^5$ km/sec. When this change is made, Newton's laws and the laws of electrodynamics will harmonize. It is easy to see that this mass increase is very small in ordinary circumstances when $v \ll c$.

What happens if a constant force acts on a body for a long time? In Newtonian mechanics the body keeps picking up speed until it goes faster than light. But this is impossible in relativistic mechanics. In relativity, the body keeps picking up, not speed, but momentum, which can continually increase because the mass is increasing. After a while there is practically no acceleration in the sense of a change of velocity, but the momentum continues to increase.
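This behaviour can be sketched numerically. Assuming a body that starts from rest under a constant force F, the momentum is $p = Ft$, and solving $p = m_0 v/\sqrt{1 - v^2/c^2}$ for the speed gives $v = (p/m_0)/\sqrt{1 + (p/m_0c)^2}$. The units ($c = m_0 = F = 1$) and the sample times are illustrative.

```python
import math


def speed_under_constant_force(F, m0, t, c=1.0):
    """Speed after time t for a body starting at rest under constant force F."""
    p = F * t  # momentum grows linearly with time
    return (p / m0) / math.sqrt(1.0 + (p / (m0 * c)) ** 2)


# Units with c = 1, m0 = 1, F = 1 (illustrative).
early = speed_under_constant_force(1.0, 1.0, 1e-3)  # close to the Newtonian F t / m0
late = speed_under_constant_force(1.0, 1.0, 100.0)  # approaches c but stays below it
```

At early times the speed follows the Newtonian value; at late times the momentum keeps growing while the speed levels off just under c.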

The mass of the electrons in the synchrotron is 2000 times as great as their normal mass, and is as great as that of a proton!

At small velocities

$$mc^2 \approx m_0 c^2 + \tfrac{1}{2} m_0 v^2,$$

where the second term is the kinetic energy. The energy of a body always equals

$$E = mc^2.$$
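Two quick checks, written as a sketch assuming the mass formula $m = m_0/\sqrt{1 - v^2/c^2}$. The first compares the exact energy above rest energy, $mc^2 - m_0c^2$, with the Newtonian $\tfrac{1}{2}m_0v^2$ at a small speed. The second finds the speed at which the mass is 2000 times the rest mass. Speeds are expressed as fractions of c, and the sample speed 0.01c is an illustrative choice.

```python
import math


def gamma(beta):
    """Mass ratio m / m0 at speed beta = v / c."""
    return 1.0 / math.sqrt(1.0 - beta**2)


def kinetic_over_rest(beta):
    """(m c^2 - m0 c^2) / (m0 c^2), which equals gamma - 1."""
    return gamma(beta) - 1.0


beta = 0.01  # one percent of the speed of light (illustrative)
exact = kinetic_over_rest(beta)
newtonian = 0.5 * beta**2  # (1/2) m0 v^2 divided by m0 c^2


def beta_for_mass_ratio(ratio):
    """Speed, as a fraction of c, at which m / m0 equals the given ratio."""
    return math.sqrt(1.0 - 1.0 / ratio**2)


beta_2000 = beta_for_mass_ratio(2000.0)
```

At 0.01c the two energies agree to better than one part in a thousand, while a mass ratio of 2000 requires a speed within about one part in ten million of c.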

The twin paradox

Poincare made the following statement of the principle of relativity: "According to the principle of relativity, the laws of physical phenomena must be the same for a fixed observer as for an observer who has a uniform motion of translation relative to him, so that we have not, nor can we possibly have, any means of discerning whether or not we are carried along in such a motion."

We consider a famous so-called "paradox" of Peter and Paul, who are supposed to be twins, born at the same time. When they are old enough to drive a space ship, Paul flies away at very high speed. Because Peter, who is left on the ground, sees Paul going so fast, all of Paul's clocks appear to go slower, his heartbeats go slower, his thoughts go slower, everything goes slower, from Peter's point of view. Of course, Paul notices nothing unusual, but if he travels around and about for a while and then comes back, he will be younger than Peter, the man on the ground! That is actually right; it is one of the consequences of the theory of relativity which has been clearly demonstrated. Just as the mu-mesons last longer when they are moving, so also will Paul last longer when he is moving. This is called a "paradox" only by the people who believe that the principle of relativity means that all motion is relative; they say, "Heh, heh, heh, from the point of view of Paul, can't we say that Peter was moving and should therefore appear to age more slowly? By symmetry, the only possible result is that both should be the same age when they meet." But in order for them to come back together and make the comparison, Paul must either stop at the end of the trip and make a comparison of clocks or, more simply, he has to come back, and the one who comes back must be the man who was moving, and he knows this, because he had to turn around. When he turned around, all kinds of unusual things happened in his space ship: the rockets went off, things jammed up against one wall, and so on, while Peter felt nothing.

So the way to state the rule is to say that the man who has felt the accelerations, who has seen things fall against the walls, and so on, is the one who would be the younger; that is the difference between them in an "absolute" sense, and it is certainly correct.

Although no one has arranged an experiment explicitly so that we can get rid of the paradox, one could compare a mu-meson which is left standing with one that had gone around a complete circle, and it would surely be found that the one that went around the circle lasted longer.
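The size of the age difference can be estimated with a small sketch. It assumes an idealized trip in which Paul moves at constant speed v both ways and the turnaround takes negligible time, so his elapsed time is Peter's multiplied by $\sqrt{1 - v^2/c^2}$. The speed and trip duration are illustrative.

```python
import math


def traveler_elapsed(earth_elapsed, v, c=1.0):
    """Time elapsed for the traveler, given the time elapsed on the ground.

    Idealization (assumption): constant speed v out and back, with an
    instantaneous turnaround.
    """
    return earth_elapsed * math.sqrt(1.0 - (v / c) ** 2)


peter_years = 10.0  # time that passes on the ground (illustrative)
paul_years = traveler_elapsed(peter_years, v=0.8)  # speed as a fraction of c
```

For a trip at 0.8c that lasts ten years on the ground, Paul ages about six years.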

Transformation of velocities

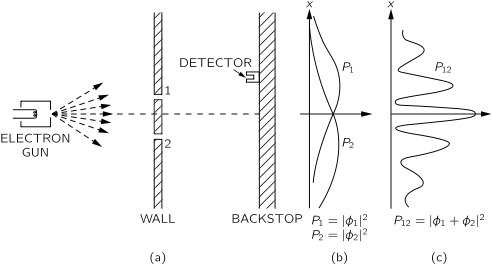

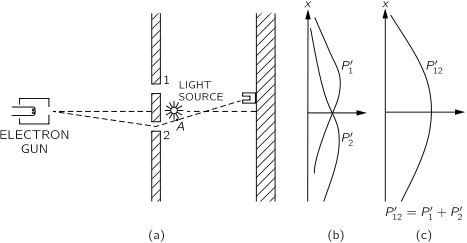

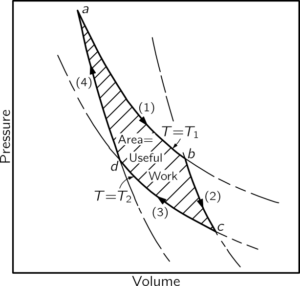

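Using the inverse of the Lorentz transformations, one finds that velocities measured in two different reference systems are related via

$$v_x = \frac{v_{x'} + u}{1 + u v_{x'}/c^2}, \qquad v_y = \frac{v_{y'}\sqrt{1 - u^2/c^2}}{1 + u v_{x'}/c^2}.$$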
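A minimal sketch of the relativistic velocity relations, assuming the standard forms $v_x = (v_{x'} + u)/(1 + u v_{x'}/c^2)$ and $v_y = v_{y'}\sqrt{1 - u^2/c^2}/(1 + u v_{x'}/c^2)$. The function names and sample speeds are illustrative, and speeds are given in units where $c = 1$.

```python
import math


def add_velocities(vx_prime, u, c=1.0):
    """x-velocity in S of an object moving at vx_prime in S', where S' moves at u."""
    return (vx_prime + u) / (1.0 + u * vx_prime / c**2)


def transverse_velocity(vy_prime, vx_prime, u, c=1.0):
    """y-velocity in S of an object with velocity (vx_prime, vy_prime) in S'."""
    return vy_prime * math.sqrt(1.0 - (u / c) ** 2) / (1.0 + u * vx_prime / c**2)


half_plus_half = add_velocities(0.5, 0.5)   # less than c, not 1.0
light_forward = add_velocities(1.0, 0.5)    # light still moves at c

# Light sent straight "up" in S' still has total speed c in S.
light_speed_in_S = math.hypot(
    add_velocities(0.0, 0.6), transverse_velocity(1.0, 0.0, 0.6)
)
```

Two speeds of half the speed of light combine to 0.8c rather than c, and light keeps the speed c in both systems.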